Executive Resilience Insider

The AI implementation myth costing you market position

Everyone believes faster adoption = competitive advantage. Fortune 500 data suggests the opposite. Here are five tension management systems that actually work.

Despite record AI investment, market analysis points to a key problem: executives prioritize fast adoption, while the competitors who win are the ones managing systemic friction, not just using advanced tools.

Fortune 500 implementation data exposes systematic failure: 80% of AI projects fall short of initial productivity objectives. Companies accelerate tool rollouts and celebrate usage metrics while missing the integration frameworks that determine competitive survival.

Cross-sector analysis spanning 100+ leaders reveals strategic miscalculation:

Companies accelerating AI tool rollouts while missing integration frameworks that create positioning advantages

Leadership teams optimizing deployment metrics while tension-aware competitors establish sustainable market capture

Executives celebrating usage statistics while systematic tension management determines competitive survival

The AI Implementation Paradox:

Tool deployment velocity ↑ = Competitive positioning ↓

Adoption metrics sophistication ↑ = Integration effectiveness ↓

Usage acceleration ↑ = Strategic advantage deterioration ↑

Systematic tension management compounds competitive advantage faster than deployment theater builds market position.

Organizations have 90 days to build comprehensive integration intelligence. Otherwise they surrender ground to tension-enabled competitors, who understand that structured tension management produces competitive outcomes that adoption velocity cannot replicate.

Why AI deployment velocity destroys competitive advantage

Research across 20 European countries demonstrates failure patterns executives refuse to acknowledge. Workers in highly automated environments report reduced purpose, diminished control, and elevated stress levels despite technically easier work. The technology worked. The integration destroyed competitive positioning.

Polish endoscopists using AI for cancer detection achieved improved diagnostic accuracy. Their performance on standard non-AI procedures simultaneously deteriorated. Stanford research on software developer hiring shows the market responding: entry-level developer demand declined as AI-generated code flooded systems, while senior engineer hiring accelerated. Markets reward integration expertise over deployment velocity.

Students using AI to write SAT essays initially showed creativity spikes. Deeper analysis revealed reduced alpha-wave activity (a biological marker of creative flow) alongside dangerous convergence patterns. Their essays became "very, very similar" to one another. The technology worked. The outcomes destroyed differentiation.

AI deployment evolution demonstrates the difference between adoption sophistication and systematic intelligence:

Phase 1: Tool deployment with usage metrics producing adoption theater

Phase 2: Integration attempts without tension frameworks creating systematic failure

Phase 3: Structured intelligence through tension management enabling competitive positioning

Sustainable advantage comes from systematic implementation, not deployment velocity.

The methodology that market leaders discovered

Organizations achieving breakthrough advantages operate through fundamentally different implementation philosophies. They separate tool deployment from tension management by building comprehensive frameworks that reveal positioning opportunities unavailable through traditional adoption approaches.

The Integration Intelligence Formula:

Tension identification + Management frameworks + Implementation discipline = Competitive positioning advantage

5 systems that transform adoption theater into strategic engines

System 1: The Expert-Novice Integration Engine

Transform AI implementation from generalist empowerment into expertise coordination that creates competitive positioning independent of deployment velocity.

Integration Protocol

Establish implementation intelligence by sequencing expert involvement rather than defaulting to either expert control or unmanaged novice democratization. Duolingo board member John Lilly points to chess instruction that non-engineers prototyped with AI in four months: "If you bring experts in too early, they'll tell you all the reasons it won't work. AI let the non-engineers show what was possible, fast."

Google teams shifted from documentation to "prototype-first" approaches using vibe coding. Multiple insiders confirm they build working demonstrations before drafting proposals, accelerating iteration velocity. But unmanaged novice empowerment creates what researchers identify as "AI slop" (outputs appearing convincing that collapse under scrutiny).

Stanford employment data reflects a market correction: companies now rely on experts to counter such deterioration while entry-level developer hiring declines.

Implementation Design

At Stitch Fix, algorithms scanned inventory and customer preferences to flag unmet needs. Custom systems generated design suggestions based on the identified gaps. Instead of allowing automated production approval, Stitch Fix routed suggestions to human designers, who judged brand alignment, quality standards, and customer resonance. The algorithms expanded creative options. The experts narrowed them to initiatives worth implementing.
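The flag, generate, and human-review flow can be sketched in Python. This is a minimal illustration under stated assumptions, not Stitch Fix's system: the `Suggestion` record, the `min_demand` threshold, the placeholder generator, and the `review` callback (standing in for human designers) are all hypothetical. The one structural point taken from the text is that nothing is auto-approved; every suggestion passes a human decision.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass
class Suggestion:
    gap: str               # unmet customer need flagged by the algorithm
    design: str            # generated design idea addressing that gap
    approved: bool = False


def flag_gaps(inventory: Set[str], preferences: Dict[str, int], min_demand: int) -> List[str]:
    """Hypothetical rule: attributes with enough demand that inventory lacks."""
    return sorted(attr for attr, demand in preferences.items()
                  if demand >= min_demand and attr not in inventory)


def generate(gaps: List[str]) -> List[Suggestion]:
    """Placeholder generator: one design concept per flagged gap."""
    return [Suggestion(gap=g, design=f"concept for {g}") for g in gaps]


def route_to_designers(suggestions: List[Suggestion],
                       review: Callable[[Suggestion], bool]) -> List[Suggestion]:
    """No automated approval: every suggestion goes through the human review callback."""
    approved = []
    for s in suggestions:
        s.approved = review(s)
        if s.approved:
            approved.append(s)
    return approved
```

In this sketch the generator can be as prolific as it likes; throughput into production is set entirely by the `review` step, which is where expert judgment sits.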

TELUS VP of AI Alexandre Guilbault warns against keeping high performers overly bound to operational duties. "The best people are the ones who can drive the biggest transformation, but often organizations want to keep their best folks in operations." Leaders must bring top employees (clinicians, technicians, HR professionals, data experts) from trenches to test AI models and participate in pilots from the start, even if it slows short-term execution.

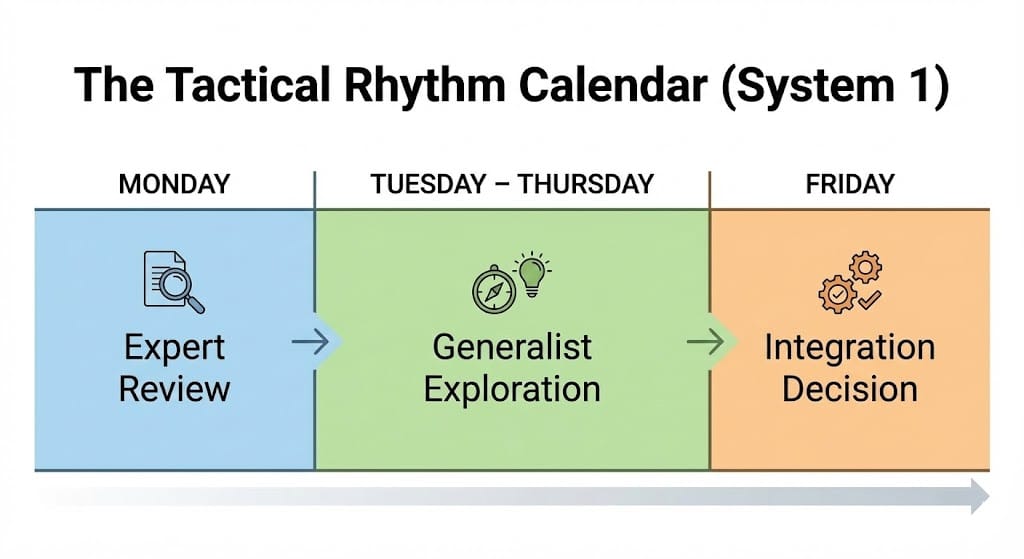

Tactical Rhythm

Expert review sessions every Monday analyze the previous week's AI-generated prototypes in two-hour blocks focused on feasibility assessment and quality validation. Generalist teams spend Tuesday through Thursday developing AI-assisted concepts without expert intervention, enabling rapid exploration without premature constraints. Friday integration meetings (90 minutes maximum) determine which approaches merit production pathways through documented expert sign-off.
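A minimal Python sketch of this weekly rhythm and its sign-off gate. The `CADENCE` table, the `Prototype` record, and the `expert_signoff` field are hypothetical names chosen for illustration; the rules taken from the text are the day-by-day schedule and that a prototype advances to a production pathway only with documented expert sign-off. The same gate gives a simple production conversion rate, which the next paragraph contrasts with raw prototype volume.

```python
from dataclasses import dataclass
from typing import List, Optional

# Weekly rhythm from the text: (activity, time-box in minutes; None = no fixed block).
CADENCE = {
    "Monday": ("expert review of last week's AI-generated prototypes", 120),
    "Tuesday": ("generalist AI-assisted development, no expert intervention", None),
    "Wednesday": ("generalist AI-assisted development, no expert intervention", None),
    "Thursday": ("generalist AI-assisted development, no expert intervention", None),
    "Friday": ("integration meeting: decide production pathways", 90),
}


@dataclass
class Prototype:
    name: str
    expert_signoff: Optional[str] = None  # hypothetical: name of the signing expert


def production_candidates(prototypes: List[Prototype]) -> List[Prototype]:
    """Only prototypes with documented expert sign-off move toward production."""
    return [p for p in prototypes if p.expert_signoff]


def conversion_rate(prototypes: List[Prototype]) -> float:
    """Share of the week's prototypes that earned a production pathway."""
    if not prototypes:
        return 0.0
    return len(production_candidates(prototypes)) / len(prototypes)
```

For example, four prototypes with one signed off give a conversion rate of 0.25, however many prototypes the week produced.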

Competitors chase prototype volume metrics. Organizations implementing phased expertise capture market positioning through superior production conversion rates. The competitive gap widens as deployment-focused companies flood systems with low-quality outputs requiring expensive remediation while structured approaches establish quality advantages that become difficult to replicate.

System 2: The Governance Velocity Accelerator

Deployment approaches optimize for either centralized control or decentralized freedom. Integration engineering builds governance frameworks that enable velocity through systematic risk allocation rather than approval sophistication.

Governance Intelligence Strategy

Organizations doubling down on centralization create AI centers of excellence that enforce standards, manage risk, and control access. When every pilot must navigate legal, security, and procurement review, innovation dies in committees and botched handoffs. By the time approval arrives, the technology has already moved on.

Excessive decentralization lets innovation outpace implementation. Teams sprint in different directions. Dashboards multiply. Automated solutions overlap and clash. UC Santa Barbara professor Paul Leonardi calls the resulting overload digital exhaustion.

One U.S. university shows the structural advantage of bifurcated governance. Its central IT center of excellence manages high-risk domains, including data governance, integration, and infrastructure, to ensure consistency and security. Individual schools run decentralized pilots to accelerate testing and idea validation.

Risk Allocation Framework

The university CIO established a governance allocation methodology that distinguishes two kinds of domains:

High risk, low experimentation domains (data security, compliance) require centralized decision authority, enterprise-wide standards, and formal approval processes.

Low risk, high experimentation domains (workflow automation, prompt testing) allow decentralized functional team control, rapid iteration cycles, and post-deployment review protocols.
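The two-way split can be expressed as a lookup that maps a domain to its governance mode. A minimal Python sketch: the domain sets reuse the examples named in the text, while the `Mode` names and the choice to default unclassified domains to the centralized path are assumptions added for illustration, not part of the university's described method.

```python
from enum import Enum


class Mode(Enum):
    CENTRAL = "centralized authority, enterprise standards, formal approval"
    DECENTRAL = "functional-team control, rapid iteration, post-deployment review"


# Example domains from the text; a real inventory would be longer.
HIGH_RISK_LOW_EXPERIMENTATION = {"data security", "compliance", "data governance",
                                 "integration", "infrastructure"}
LOW_RISK_HIGH_EXPERIMENTATION = {"workflow automation", "prompt testing"}


def governance_mode(domain: str) -> Mode:
    d = domain.strip().lower()
    if d in HIGH_RISK_LOW_EXPERIMENTATION:
        return Mode.CENTRAL
    if d in LOW_RISK_HIGH_EXPERIMENTATION:
        return Mode.DECENTRAL
    # Assumption: unclassified domains take the cautious path until someone classifies them.
    return Mode.CENTRAL
```

Defaulting unknown domains to central review trades some speed for safety. An organization that prefers to fail fast could flip that default.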

Booking.com HR teams expressed extreme nervousness about introducing AI search platforms. They worried about exposing sensitive employee information to colleagues during company resource searches. Senior engineering manager Tadeu Faedrich explains: "We didn't want people finding documents they shouldn't have access to." They implemented systems ensuring AI searches only reveal information employees have permission to view or access, enabling employees to use tools freely without approval delays or privacy concerns.
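The key design choice is to filter by permission before matching, so restricted documents can never surface in results. A minimal Python sketch under that assumption: the `Document` record, group-based `allowed_groups`, and substring matching (standing in for a real AI search engine) are hypothetical and do not describe Booking.com's actual implementation.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: FrozenSet[str]  # groups permitted to view this document


def search(query: str, docs: List[Document], user_groups: Set[str]) -> List[str]:
    """Permission-aware search: drop documents the user cannot view, then match."""
    visible = [d for d in docs if d.allowed_groups & user_groups]
    q = query.lower()
    return [d.title for d in visible if q in d.title.lower() or q in d.text.lower()]
```

Two users issuing the same query can get different result sets. The search layer itself never sees, and so cannot leak, documents outside the caller's permissions.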

Governance Assessment

Track centralized decisions that take more than five business days to approve. If there are three or more per month, evaluate them for decentralization to accelerate velocity. Simultaneously track decentralized implementations that create security incidents or integration conflicts. If there are two or more per quarter, evaluate them for centralization to improve coordination. This works because governance stays flexible rather than frozen in a permanent structure.
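Both thresholds can be checked with a few lines of arithmetic. A minimal Python sketch: `business_days` counts weekdays elapsed after submission up to and including approval and ignores public holidays (an assumption); `review_flags` and its inputs are hypothetical names. The thresholds (more than five business days, three or more per month, two or more incidents per quarter) come from the text.

```python
from datetime import date, timedelta
from typing import Dict, Iterable, Tuple


def business_days(start: date, end: date) -> int:
    """Weekdays elapsed after `start` up to and including `end` (holidays ignored)."""
    days, d = 0, start
    while d < end:
        d += timedelta(days=1)
        if d.weekday() < 5:  # Monday=0 ... Friday=4
            days += 1
    return days


def review_flags(central_approvals: Iterable[Tuple[date, date]],
                 decentral_incidents_this_quarter: int) -> Dict[str, bool]:
    """central_approvals: (submitted, approved) date pairs for one month."""
    slow = sum(1 for submitted, approved in central_approvals
               if business_days(submitted, approved) > 5)
    return {
        "evaluate_decentralization": slow >= 3,
        "evaluate_centralization": decentral_incidents_this_quarter >= 2,
    }
```

A request submitted on a Monday and approved the following Monday counts as five business days, so it sits exactly at the limit and does not count as slow.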

Competitors building flexible frameworks capture positioning advantages, while centralization-dependent organizations watch opportunities expire in approval processes and overly decentralized companies waste resources on fragmented, incompatible initiatives.

System 3: The Hierarchy Optimization Protocol

Consensus wisdom declares flatter structures accelerate decision velocity. Integration intelligence reveals how structural changes without workflow redesign simply shift and magnify destructive friction.

Structural Intelligence Development

Many organizations (particularly technology companies) embrace the assumption that flatter is better. As AI automates reporting and routine decisions, executives assume they can remove layers and accelerate. Designed correctly, hierarchy harnesses complexity; restructured blindly, it destroys momentum.

Michael Arena, former General Motors chief talent officer and veteran social network researcher, discovered that excessive flattening results in overloaded managers creating bottlenecks as they fail to keep pace with decisions, crises, and operational duties. When managers lead more than seven people, they often work 10-13 hour days while struggling to complete responsibilities, producing burnout and organizational paralysis.

Assessment Framework

Arena suggests evaluating how people work across two modes before structural changes: heads-down work not requiring nuanced coordination (coding, writing, data analysis) versus heads-up work requiring interdependence and two-way communication (customer relationship building, team brainstorming, strategic communication).

If work is predominantly heads-down execution by people working without tight coordination, use AI agents to assume routine tasks and lighten manager loads, enabling larger team leadership. If work demands heads-up engagement, maintain smaller reporting structures and reinvest efficiency gains so managers spend more time communicating, exercising judgment, and strengthening relationships.
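The decision rule can be sketched as a function of how a team's hours split between the two modes. A minimal Python sketch: reading "predominantly" as a majority of logged hours (the `majority=0.5` default) is an assumption, as are the function and parameter names; the two recommendations paraphrase the text.

```python
def span_recommendation(heads_down_hours: float, heads_up_hours: float,
                        majority: float = 0.5) -> str:
    """Recommend a span of control from the heads-down vs heads-up work mix."""
    total = heads_down_hours + heads_up_hours
    if total <= 0:
        raise ValueError("need some logged work hours")
    heads_down_share = heads_down_hours / total
    if heads_down_share > majority:
        # Mostly independent execution: AI agents absorb routine tasks.
        return "larger team: let AI agents absorb routine tasks to lighten manager load"
    # Mostly interdependent work: keep teams small and reinvest efficiency gains.
    return "smaller team: reinvest AI gains in communication, judgment, and relationships"
```

A team logging 30 heads-down hours and 10 heads-up hours would be steered toward a larger span. The reverse mix keeps the smaller structure.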

Management Load Calculation

Arena's research establishes clear breaking points. Managers leading seven or fewer direct reports maintain sustainable workload patterns. Beyond seven, coordination burden grows nonlinearly: each additional direct report adds not just incremental work but new communication pathways requiring management attention, since the possible one-to-one links in a group of n people grow as n(n-1)/2. Count direct reports, then multiply by average weekly coordination hours per person. If the total exceeds 20 hours weekly, your structure has already created bottlenecks regardless of AI tool deployment.
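The load check is simple arithmetic, shown here as a minimal Python sketch with hypothetical function names. The 20-hour weekly limit and the reports-times-hours calculation come from the text. The pairwise-link count is the standard n(n-1)/2 formula for possible one-to-one channels in a group.

```python
def coordination_load(direct_reports: int, hours_per_report: float) -> float:
    """Weekly coordination hours: direct reports x average hours per report."""
    return direct_reports * hours_per_report


def is_bottlenecked(direct_reports: int, hours_per_report: float,
                    weekly_limit: float = 20.0) -> bool:
    """True when coordination load exceeds the weekly limit."""
    return coordination_load(direct_reports, hours_per_report) > weekly_limit


def pairwise_links(team_size: int) -> int:
    """Possible one-to-one communication channels among team_size people."""
    return team_size * (team_size - 1) // 2
```

The two thresholds interact. At 3 coordination hours per report, six reports come to 18 hours, while the seventh pushes the load to 21 hours, past the 20-hour line. Meanwhile a manager plus 7 reports (8 people) has 28 possible pairwise links, and a manager plus 10 reports (11 people) has 55.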

Workday VP of people analytics Phil Wilburn stopped requiring teams to build briefing decks and weekly update documents. Unstructured data from Slack conversations and project plans now flows into a single AI system. Before meetings, Wilburn asks the AI to compile briefs or investigate topics in more depth. His team no longer wastes hours piecing together updates or answering quick questions. AI hasn't replaced management; it has removed the administrative sludge that prevented effective leadership.

When competitors flatten structures chasing velocity myths, organizations that base hierarchy decisions on how work actually flows establish coordination advantages. Those advantages become harder to replicate as competitors discover that their structural changes created new bottlenecks instead of eliminating existing friction.

System 4: The Implementation Tempo Calibrator

Executives want to be seen "doing AI," and velocity has become the evidence that they're doing it correctly. Excessive speed and impatience fuel decision-implementation gaps: leaders move quickly to adopt tools but don't slow down enough to fix broken systems or understand how the technology fits within existing constraints, habits, and workflows.

Tempo Intelligence Strategy

Northwestern University professor Hatim Rahman describes a hospital project where PhD student Jodie Koh studies how AI is being introduced to improve medical diagnostic access. Training AI models requires thousands of ultrasound images, especially repeat scans of the same patients over time.

Efficiency pressures in healthcare taught clinicians to minimize images per patient to save time and money. Obtaining patient consent to use images creates additional delays. Progress slows further because the imaging departments that need to collaborate to implement AI tools often don't, and some have histories of nasty interdepartmental conflict.

Technicians resist the AI project fearing resulting data will monitor performance, increase workloads, or justify job cuts. The image assembly process drags on far longer than leaders expected when implementation began. Fast deployment decisions. Slow integration reality. Zero competitive advantage.

The 80% shortfall rate reflects this temporal mismatch. Organizations announce AI initiatives in weeks but ignore that implementation requires months of workflow redesign, stakeholder alignment, and cultural adaptation. This disconnect burns resources on announced initiatives that never reach sustainable operation, while tempo-calibrated competitors capture positioning.

Strategic Velocity Framework

Investor James Cham at Bloomberg Beta describes an AI-native startup that tried to avoid this drag. It cordoned off a small team from the rest of the company, moved them into separate offices, and gave them a clear mandate: build end-to-end workflows that software could run without human involvement.

In practice, this meant shifting "management" work from overseeing people to overseeing AI-based tools performing work themselves (tools that could review, write, and fix code based on bugs detected in production). The separation and isolation allowed faster movement than the broader organization, though it remains uncertain whether that velocity will translate into sustainable company-wide adoption.

Implementation Pacing Standards

Perry Klebahn leads Stanford d.school's Launchpad accelerator where more than 100 companies have been founded in the past decade. Klebahn reports AI makes it far easier than in the past for founders to generate prototypes and iterate quickly.

But that very ease sometimes dampens the deep commitment, determination, and ownership founders need to develop promising ideas into viable companies. "Founders used to say 'my idea' or 'our prototype.' Now they say 'this idea.'" When ideas come too easily, people stop fighting for them. Build speed bumps into creative and strategic work, such as checkpoints, incubation periods, and reflection rituals. Psychologists call this the "labor leads to love" effect: the harder we work for something, the more we value it, to justify our efforts to ourselves and others.

Distinguish fast-track decisions from slow-track decisions. Fast-track decisions are two-way doors with reversible consequences, such as workflow automation, tool testing, and prompt refinement; they should take 48 hours at most. Slow-track decisions are one-way doors with lasting organizational impact, such as hiring changes, system architecture, and integration partnerships; they require an evaluation period of at least two weeks. Track how many slow-track decisions are being rushed through fast-track processes. That count reveals where deployment theater is destroying competitive positioning while tempo-calibrated competitors establish sustainable implementation advantages.
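The tracking rule can be sketched as a small decision log. A minimal Python sketch: the `Decision` record and its `reversible` flag (a proxy for "two-way door") are hypothetical, and measuring decision time in hours is an assumption. The 48-hour ceiling and two-week floor come from the text. Alongside the rushed-decision count the text asks for, the sketch also lists fast-track decisions that overran their 48-hour limit.

```python
from dataclasses import dataclass
from typing import List

FAST_TRACK_MAX_HOURS = 48        # two-way doors: decide within 48 hours
SLOW_TRACK_MIN_HOURS = 14 * 24   # one-way doors: evaluate for at least two weeks


@dataclass
class Decision:
    topic: str
    reversible: bool         # hypothetical flag: True for a two-way door
    hours_to_decide: float


def required_track(d: Decision) -> str:
    return "fast" if d.reversible else "slow"


def rushed_count(decisions: List[Decision]) -> int:
    """One-way-door decisions made faster than the two-week minimum."""
    return sum(1 for d in decisions
               if required_track(d) == "slow" and d.hours_to_decide < SLOW_TRACK_MIN_HOURS)


def overdue_fast_track(decisions: List[Decision]) -> List[str]:
    """Two-way-door decisions that took longer than the 48-hour ceiling."""
    return [d.topic for d in decisions
            if required_track(d) == "fast" and d.hours_to_decide > FAST_TRACK_MAX_HOURS]
```

A system-architecture choice settled in three days counts as rushed. An integration partnership evaluated for over two weeks does not.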

System 5: The Adoption Momentum Protocol

Traditional thinking assumes AI transformation should be led from the top or driven by peers. Integration intelligence reveals how momentum requires coordinated pressure across organizational levels instead of choosing between top-down authority and bottom-up enthusiasm.

Momentum Intelligence Development

Worklytics data shows teams were twice as likely to adopt AI tools when managers used them first. Yet excessive top-down pressure for any change sparks resistance and performative compliance (employees checking boxes instead of changing how they work).

Too much reliance on bottom-up efforts and energy produces fragmentation and fatigue (pockets of experimentation that never scale). One CTO compared it to having hundreds of little speedboats racing in different directions, slowing the company because efforts aren't coordinated.

Rhythm Framework

At a Fortune 20 retailer, the CEO keeps AI as a standing topic in monthly meetings with hundreds of VPs. A cross-functional steering committee meets regularly to align adoption, governance, and use cases across finance, HR, technology, and operations. Departmental staff meetings end with the same ritual: brief "AI moments" where leaders take turns sharing what they've tried, what's working, and what's not. AI became part of the operating rhythm rather than a side project.

Impact Measurement Standards

Many companies measure AI activity (clicks, logins, or prompt counts) rather than impact. One Fortune 100 company ranked employees by AI-tool usage and sent weekly leaderboards. Another tied "AI activity" to performance reviews. Both created adoption theater without competitive positioning.

Zendesk senior VP of engineering Nan Guo explains how her team built balanced scorecards of six engineering productivity metrics instead of tracking superficial usage indicators: five operational (cycle time, code review cycle time, merge frequency, change failure rate, number of deploys) and one engagement metric capturing how engineers feel about their tools. Focusing on impact gives people clearer guidance on what leaders value from the top, enabling teams from the bottom to adopt AI in ways actually improving work.
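A minimal Python sketch of such a six-metric scorecard, computed from simple pull-request and deploy records. Zendesk's exact metric definitions are not given in the text, so these are assumptions: cycle time is PR open to merge, code review cycle time is PR open to first review, merge frequency is merges per day, change failure rate is the share of deploys that caused a failure, and engagement is the mean of a tool-satisfaction survey (for example on a 1 to 5 scale). The record types and field names are hypothetical, and inputs are assumed non-empty.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class PullRequest:
    opened_h: float        # hours from period start when the PR was opened
    first_review_h: float  # when the first review landed
    merged_h: float        # when it was merged


@dataclass
class Deploy:
    caused_failure: bool


def scorecard(prs: List[PullRequest], deploys: List[Deploy],
              period_days: int, tool_satisfaction: List[int]) -> Dict[str, float]:
    """Five operational metrics plus one engagement metric (assumes non-empty inputs)."""
    return {
        "cycle_time_h": median([p.merged_h - p.opened_h for p in prs]),
        "review_cycle_time_h": median([p.first_review_h - p.opened_h for p in prs]),
        "merges_per_day": len(prs) / period_days,
        "change_failure_rate": sum(d.caused_failure for d in deploys) / len(deploys),
        "deploys": len(deploys),
        "tool_sentiment": sum(tool_satisfaction) / len(tool_satisfaction),
    }
```

None of these inputs counts prompts or logins, so a team can only move the scorecard by changing how work flows, not by using AI tools more visibly.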

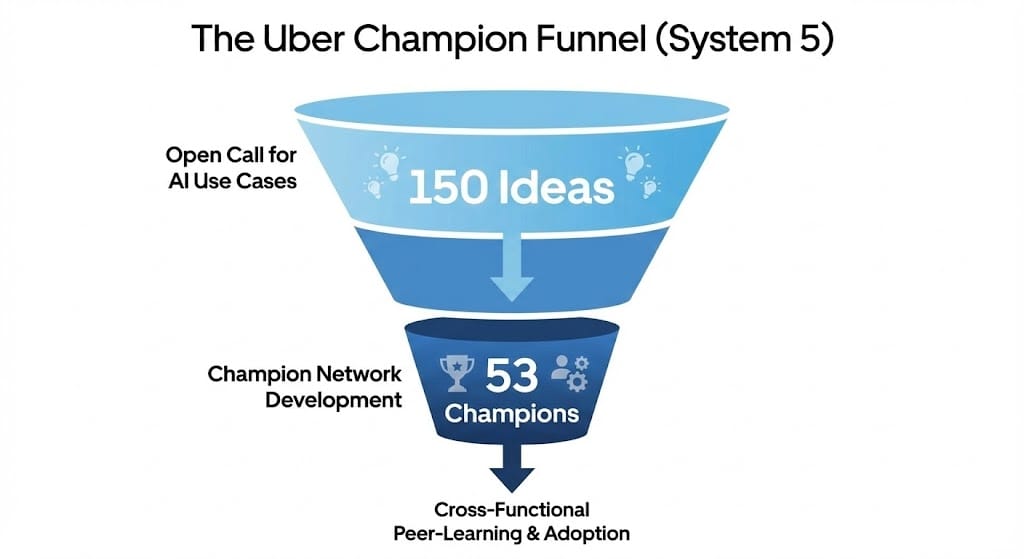

Champion Network Development

At Uber, enterprise gen AI strategy lead Manjari Agochiya launched an open call for AI use cases. It surfaced approximately 150 ideas, but more importantly revealed 53 early AI champions across functions (employees already experimenting, sharing lessons, and building prototypes). They became Uber's first real network of AI champions, forming the backbone of internal peer-learning communities.

Champion networks in other organizations consistently boost engagement, strengthen cross-functional morale, and open up visibility opportunities (especially for non-technical employees who emerge as early advocates). Organizations maintaining standing AI agenda items in executive meetings while simultaneously building peer champion networks achieve adoption that translates into measurable business outcomes. Competitors pursuing only top-down mandates or only bottom-up enthusiasm remain trapped in deployment theater that produces activity metrics without competitive advantage accumulation.

Structured tension management transforms deployment theater

Implementation intelligence requires the same resources as deployment approaches, simply allocated toward systematic tension management rather than adoption sophistication.

Organizations implementing methodical frameworks consistently outperform deployment-dependent competitors, while tool-focused companies hit strategic limits when competitive pressure rises.

The transformation window narrows as market leaders discover tension-enabled advantages while establishing competitive positioning that deployment sophistication cannot replicate.

Companies implementing these systems within the next 90 days establish competitive advantages that velocity-dependent executives cannot replicate through adoption sophistication alone.

The choice determines competitive survival. The window closes. The consequences are permanent.